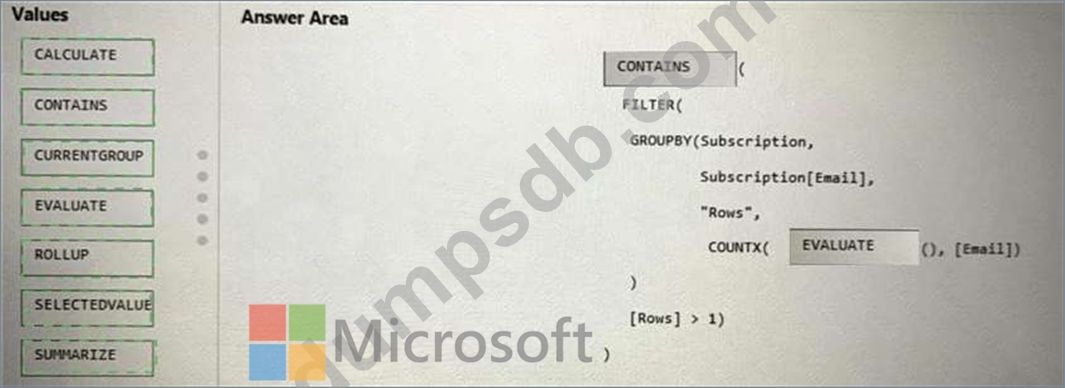

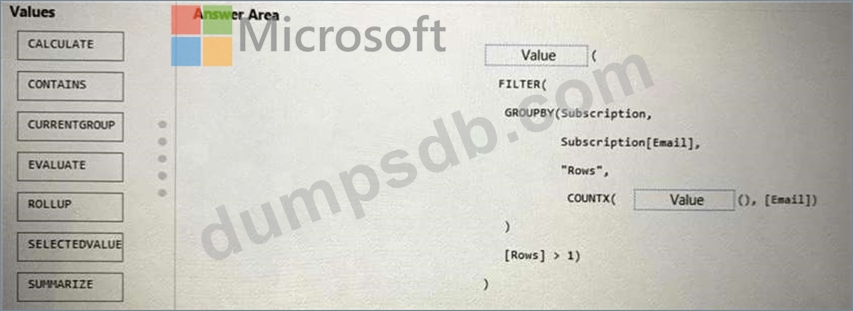

Question 56

You are using DAX Studio to query an XMLA endpoint.

You need to identify the duplicate values in a column named Email in a table named Subscription.

How should you complete the DAX expression? To answer, drag the appropriate values to the targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.
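
The underlying logic of a duplicate check is: group the rows by Email, count the rows in each group, and keep only the groups whose count is greater than 1. The following is a minimal Python sketch of that logic, not the DAX answer itself. The sample addresses and the `find_duplicates` helper are hypothetical and stand in for the Subscription[Email] column.

```python
from collections import Counter


def find_duplicates(values):
    """Return each value that occurs more than once, mapped to its count."""
    counts = Counter(values)  # group by value and count occurrences
    return {value: n for value, n in counts.items() if n > 1}  # keep count > 1


# Hypothetical sample standing in for the Subscription[Email] column.
emails = [
    "a@contoso.com",
    "b@contoso.com",
    "a@contoso.com",
    "c@contoso.com",
    "b@contoso.com",
    "a@contoso.com",
]

duplicates = find_duplicates(emails)
print(duplicates)
```

A DAX expression for the same task would follow the same three steps: a grouping function over the Email column, a row count per group, and a filter on that count.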

Question 57

You are using DAX Studio to analyze a slow-running report query. You need to identify inefficient join operations in the query. What should you review?

Question 58

You use Advanced Editor in Power Query Editor to edit a query that references two tables named Sales and Commission. A sample of the data in the Sales table is shown in the following table.

A sample of the data in the Commission table is shown in the following table.

You need to merge the tables by using Power Query Editor without losing any rows in the Sales table.

How should you complete the query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

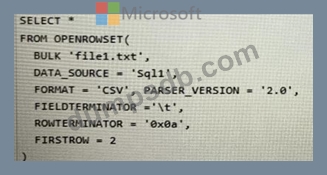

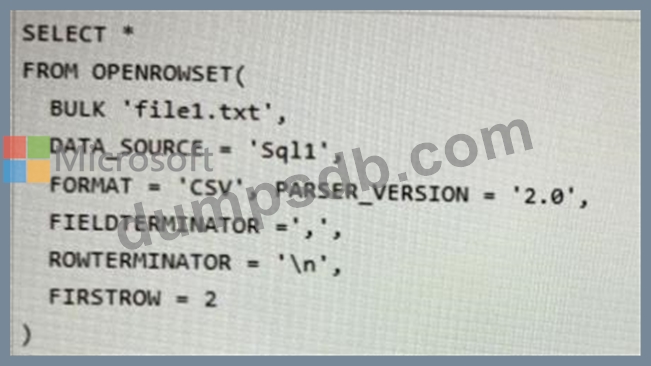

Question 59

You have a file named File1.txt that has the following characteristics:

* A header row

* Tab delimited values

* UNIX-style line endings

You need to read File1.txt by using an Azure Synapse Analytics serverless SQL pool.

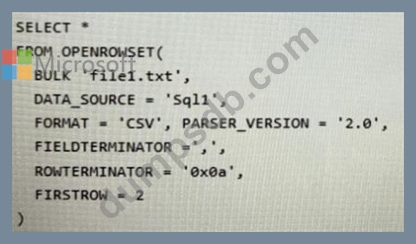

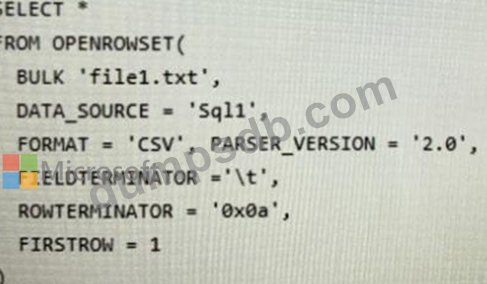

Which query should you execute?

A)

B)

C)

D)

Question 60

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

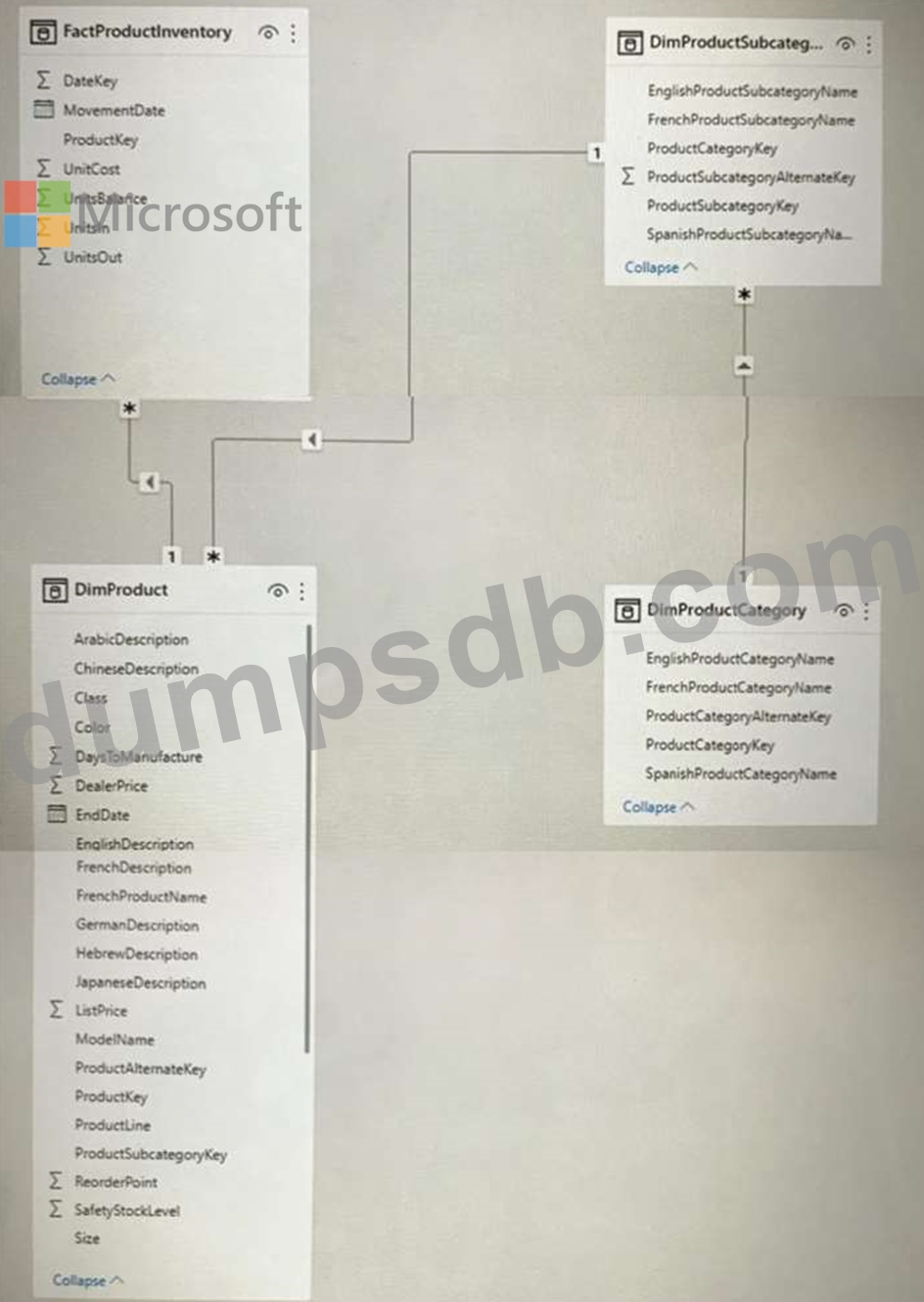

You have the Power BI data model shown in the exhibit. (Click the Exhibit tab.)

Users indicate that when they build reports from the data model, the reports take a long time to load.

You need to recommend a solution to reduce the load times of the reports.

Solution: You recommend normalizing the data model.

Does this meet the goal?